Author: admin

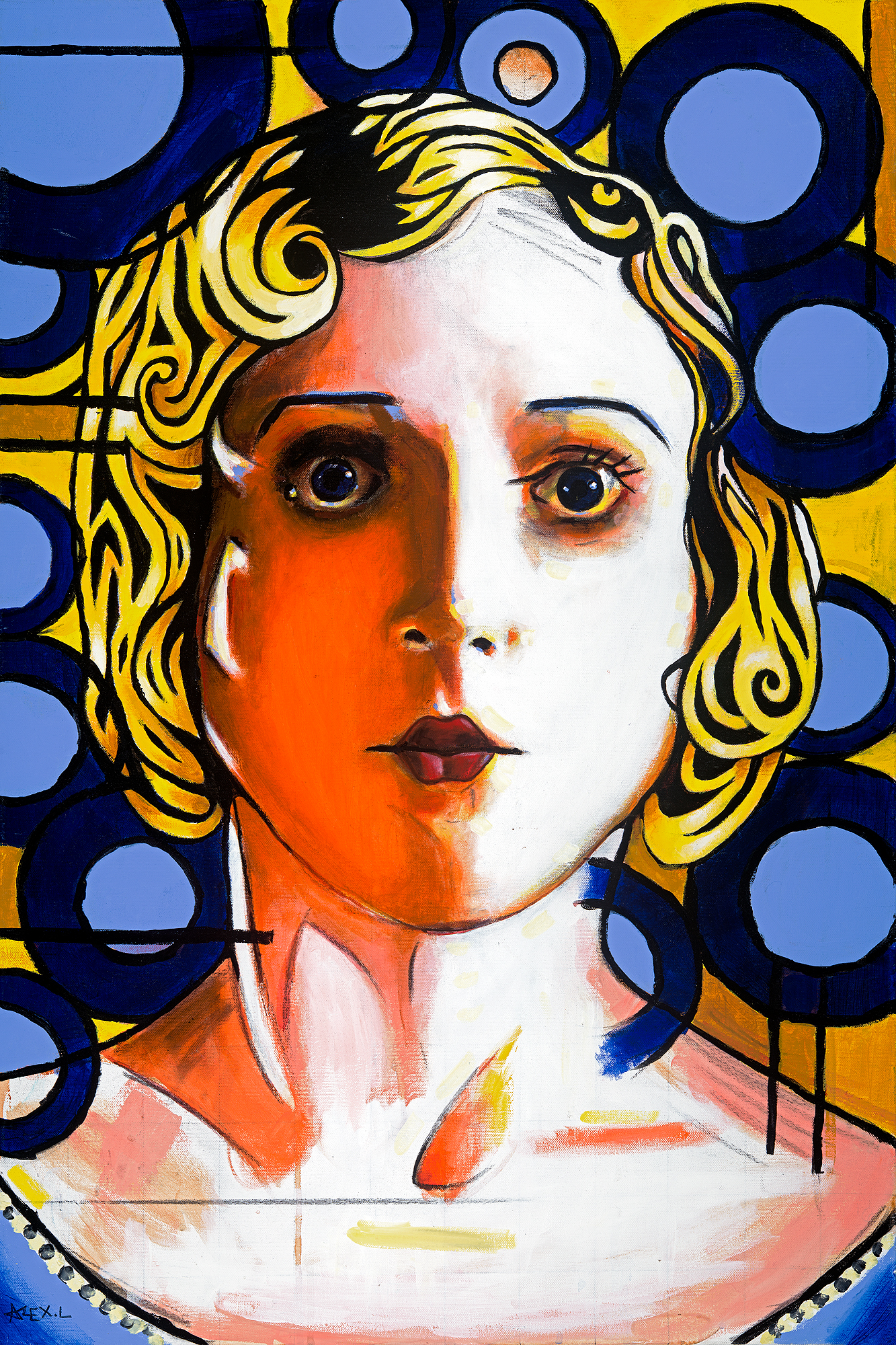

The Seeds of Division by Alex Loveless (2018)

Acrylic on Canvas. 60cm x 90cm.

The Will of the People by Alex Loveless (2018)

Acrylic on Canvas. 60cm x 90cm.

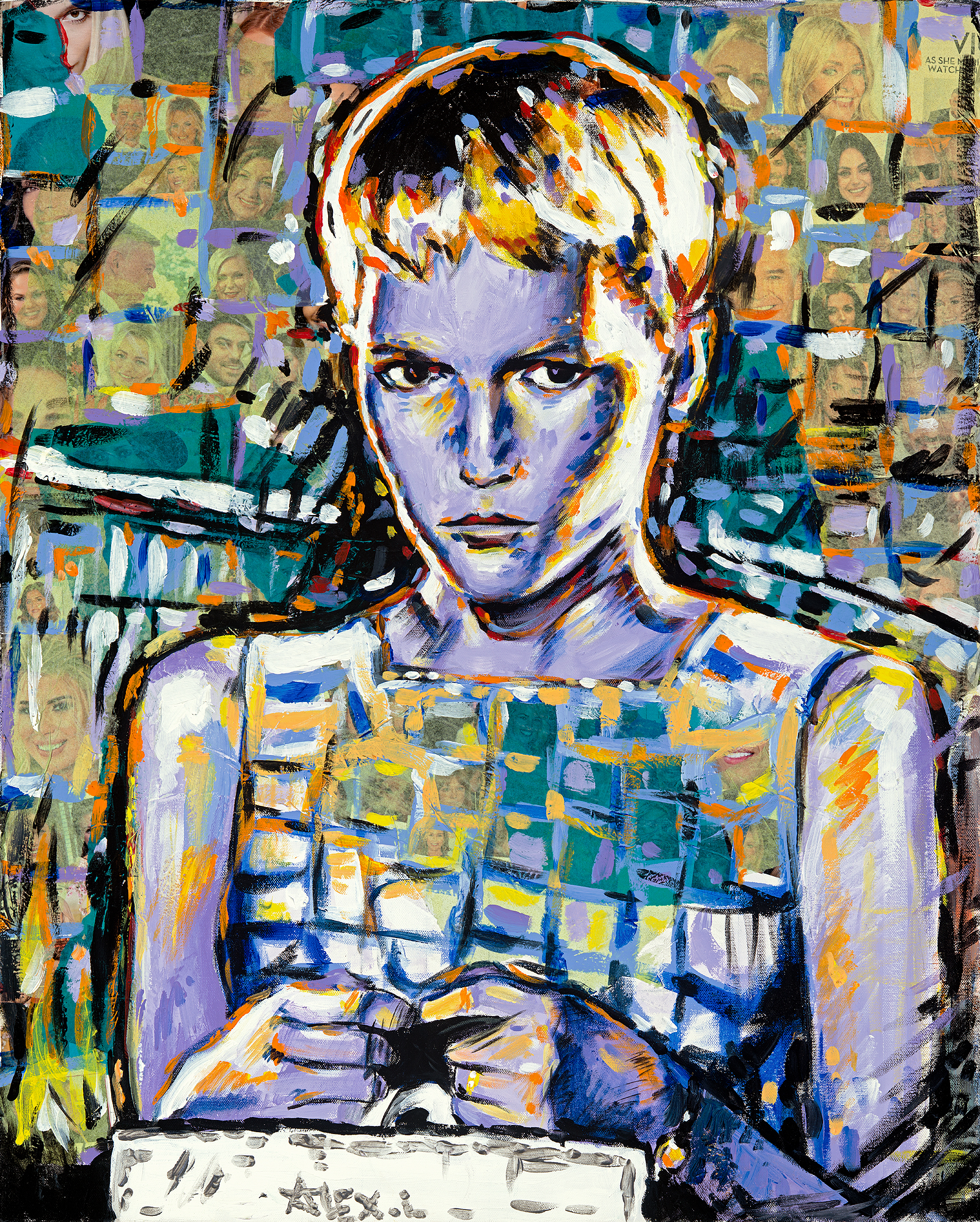

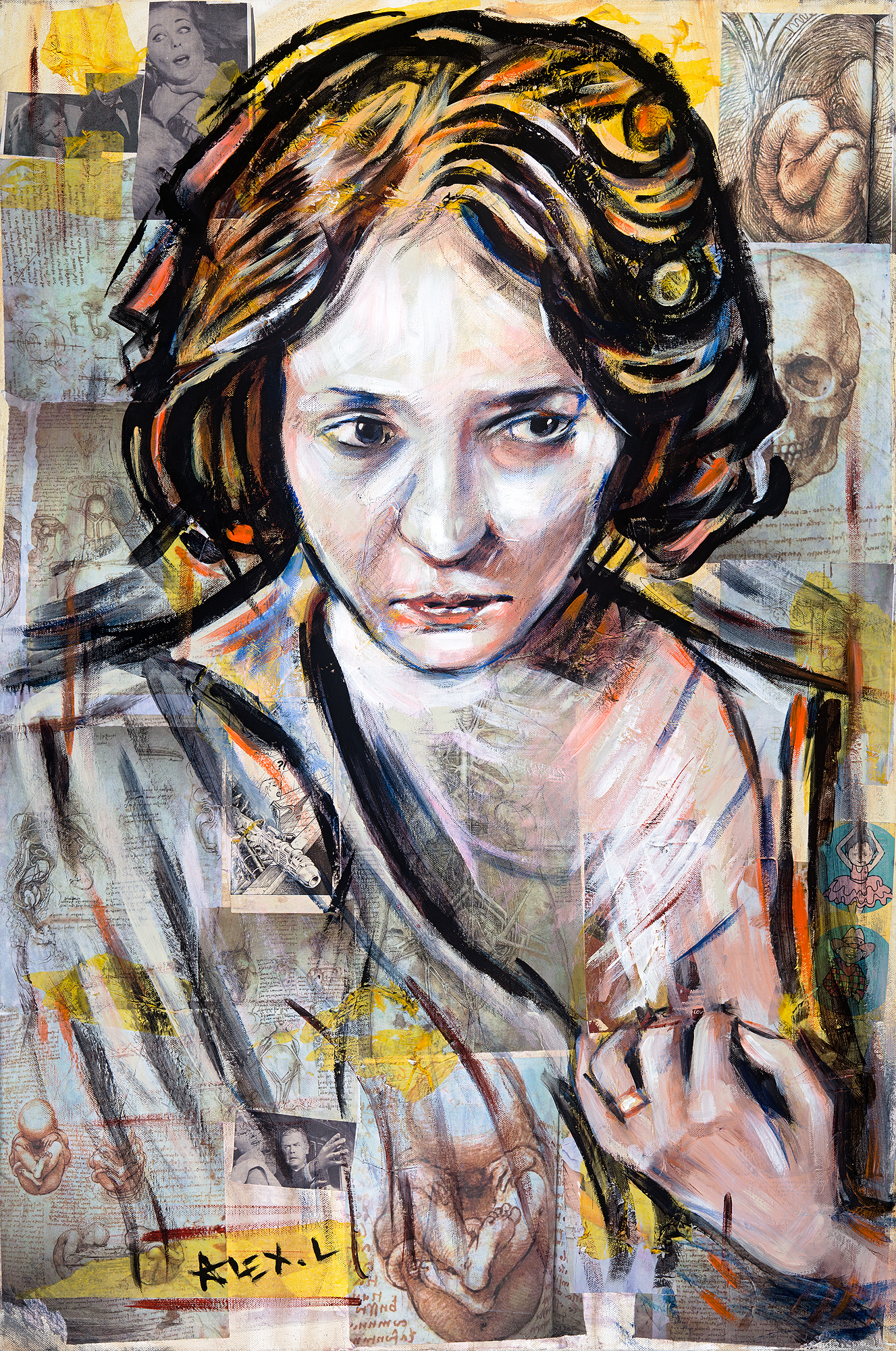

Somebody by Alex Loveless (2018)

Acrylic and Mixed Media on Canvas. 60cm x 90cm.

Ordinary by Alex Loveless (2018)

Acrylic on Canvas. 70cm x 90cm.

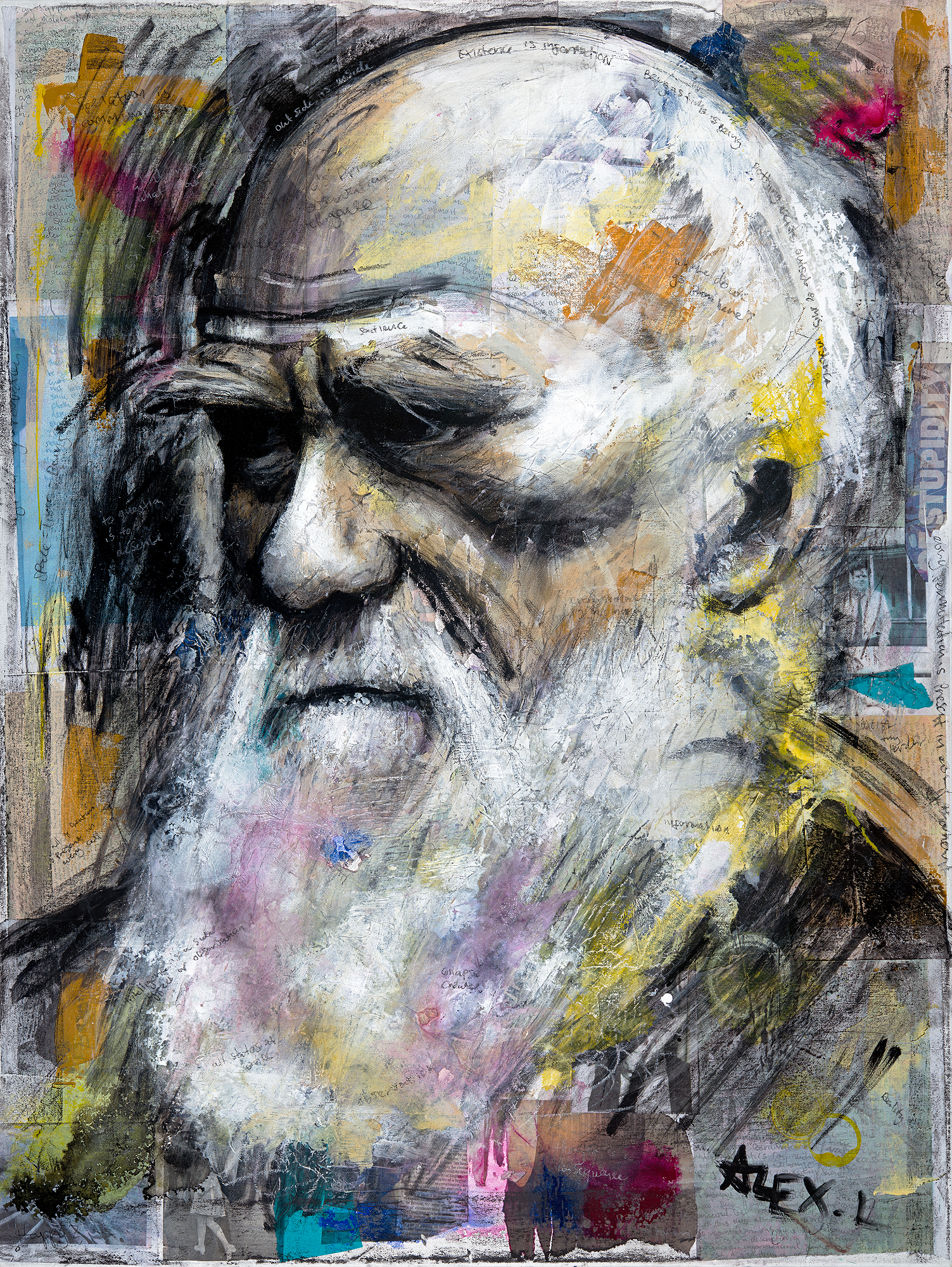

Where Do We Go From Here? by Alex Loveless (2019)

Acrylic and Mixed Media on Canvas. 30in x 40in.

Human (5) by Alex Loveless (2019)

Acrylic and Mixed Media on Canvas. 60cm x 90cm.

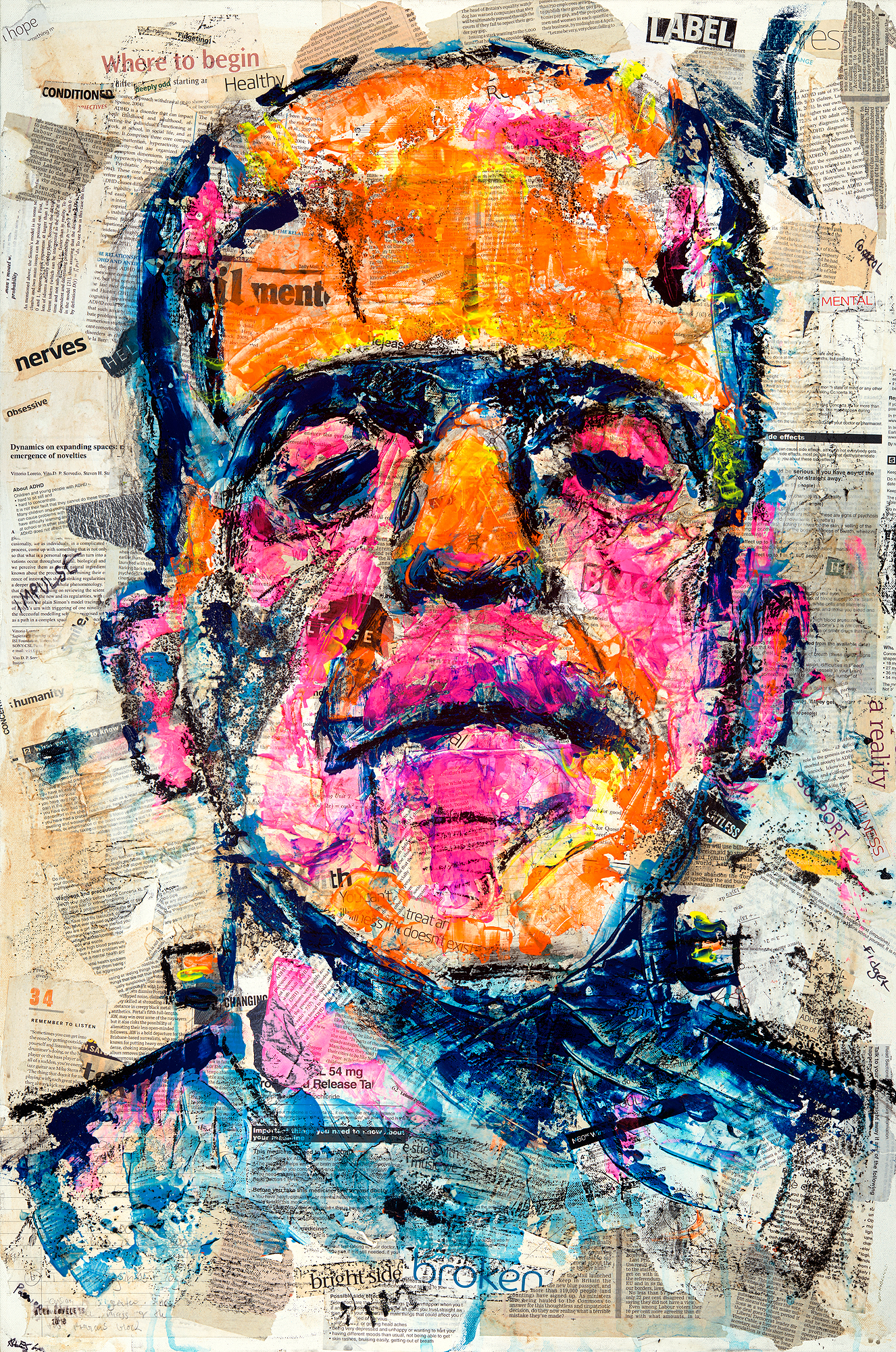

Plan? Nein. by Alex Loveless (2018)

Acrylic and Mixed Media on Canvas. 70cm x 90cm.

What Makes Us Stronger

“What the hell are we supposed to do now?!” I asked, panicked and confused. It was a genuine question: what should a hiker do when faced with such a predicament? Having no experience, I really didn’t know. The worried glances of my friends did nothing to calm me. They knew this was bad. “Get the…

Self Portrait with Texture

I’m struggling to pin down when this was produced. It was painted using a Polaroid picture (which I still have) from circa 1998/9 as its reference. I barely remember making this. By the look of it, there was originally a highly textured abstract piece on the board, which presumably I didn’t like. Or perhaps I just…

Spaceship #1

The theme here, if it’s not obvious, is the relationship between nature, randomness, mechanism and determinism. The title is not whimsical. The six-cell motif that dominates half of the painting represents a single state of a cellular automaton, one of an infinite number possible, from Conway’s Game of Life. This particular…

Manga Mannequin Considering Jumping Into the Abyss

Here is a picture of my Manga Mannequin standing and staring into the abyss. He seems fearful, hesitant, afraid even. He also has no face to tell us this with. No voice and no story. These Manga Mannequins do suggest a story, or perhaps a character, or element of culture, or something like that. There is strength…

Finished

You won’t be surprised to learn that I find it difficult to focus on conference calls. A random floating speck of dust catching a ray of sunlight is enough to draw me away from the matter at hand. The ceaseless distraction that is the social web is like a black hole sucking…